(This item is moved here from the ODK Geo roadmap document.)

The idea here is to allow the enumerator to collect several points and use their average as the result. By waiting for several points to be collected, the enumerator can improve the accuracy of the final result.

Some discussion is needed as to the exact feature set and UI.

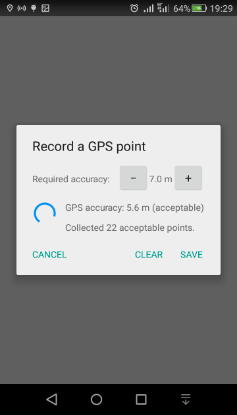

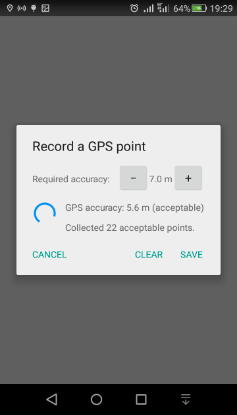

To prompt discussion, here's an example of a previous implementation:

In this implementation, points are continuously collected, and the user sees continuous feedback on the accuracy level. During collection, the user can adjust the desired accuracy threshold (which defaults to 5 m) to include more or fewer points. The dialog shows how many of the points collected so far meet the threshold. When the user is happy with the number of points and the accuracy setting, they can tap "Save", which computes the average of all the points that meet the threshold.
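Here is a minimal Python sketch of the "Save" step described above. It assumes each reading carries a latitude, a longitude, and an accuracy radius in meters, and that a reading "meets the threshold" when its accuracy value is at or below it. `Reading` and `average_within_threshold` are hypothetical names and are not part of ODK Collect.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Reading:
    """One location fix (hypothetical type); accuracy_m is the accuracy radius in meters."""
    lat: float
    lon: float
    accuracy_m: float


def average_within_threshold(
    readings: Sequence[Reading], threshold_m: float = 5.0
) -> Optional[Tuple[float, float, int]]:
    """Average the readings whose accuracy meets the threshold.

    Returns (mean_lat, mean_lon, count), or None if no reading qualifies.
    A plain arithmetic mean of lat/lon is assumed to be adequate because the
    points lie within a few meters of each other (no antimeridian handling).
    """
    # Lower accuracy values are better, so "meets the threshold" means <=.
    accepted = [r for r in readings if r.accuracy_m <= threshold_m]
    if not accepted:
        return None
    n = len(accepted)
    mean_lat = sum(r.lat for r in accepted) / n
    mean_lon = sum(r.lon for r in accepted) / n
    return mean_lat, mean_lon, n
```

With readings at 3 m, 4 m, and 15 m accuracy and the default 5 m threshold, only the first two are averaged; raising the threshold to 20 m would include all three.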

This is just an example: everything is open for discussion!

@danbjoseph previously asked these questions about this (I'm copying them here to consolidate them into this thread):

It's great that you are continuing to improve and build out the geo functionality of ODK Collect!

Would this be a control that is toggled in the survey form? Automatically something that the user would be able to do for a geopoint question? Or a new geo question type?

If the user adjusts the accuracy down/up, does it affect which points are included in the calculation looking at all those that have been collected since the question started? Or does it only affect which points are stored and used for the calculation going forwards?

I find the previous implementation example a little confusing. Is the "required accuracy" for the individual points or for the averaged location? Is the "GPS accuracy" the calculated average accuracy or the accuracy of the current sensor reading? Would it be useful to know the total number of collected points in addition to the number of acceptable points?

And this was my reply:

Thanks for these questions!

We're thinking of this as a feature of a GeoPoint question. Whether it's enabled by the app user or by the form designer (or both) is not yet determined.

In the example:

- The GPS accuracy shown is the accuracy of the current sensor reading.

- The "required accuracy" threshold is for the individual points.

- When "required accuracy" is adjusted, it changes which of the already collected points are included in the average. In other words, all the points are stored, and all of them are filtered according to this adjustable parameter.
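The storage model described in the last bullet can be sketched the same way: every reading is kept, and the adjustable threshold is re-applied to the whole stored list whenever the dialog needs the current count or average. This is an illustration only; `AveragingSession` and its methods are hypothetical, and returning both the accepted and total counts is just one possible answer to the question above about also showing the total number of collected points.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Reading:
    lat: float
    lon: float
    accuracy_m: float  # reported accuracy radius in meters


@dataclass
class AveragingSession:
    """Hypothetical session: all readings are stored, and the adjustable
    threshold is applied to the whole stored list each time it is queried."""
    threshold_m: float = 5.0
    readings: List[Reading] = field(default_factory=list)

    def add(self, reading: Reading) -> None:
        # Keep every reading, even one that currently misses the threshold,
        # so that loosening the threshold later can bring it back in.
        self.readings.append(reading)

    def accepted(self) -> List[Reading]:
        return [r for r in self.readings if r.accuracy_m <= self.threshold_m]

    def counts(self) -> Tuple[int, int]:
        """(accepted, total): both numbers could be shown in the dialog."""
        return len(self.accepted()), len(self.readings)

    def average(self) -> Optional[Tuple[float, float]]:
        pts = self.accepted()
        if not pts:
            return None
        n = len(pts)
        return sum(p.lat for p in pts) / n, sum(p.lon for p in pts) / n
```

Changing `threshold_m` mid-session immediately changes `counts()` and `average()` for points already collected, which is the behavior the prototype had.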

That's how this particular prototype implementation worked, though I don't claim that it's the best design. It does give you a clue as to how it works because you can see how the number of acceptable points changes when you adjust the accuracy threshold, but still, I can see how the display would be ambiguous for someone who wasn't familiar with it.

I would be careful, and want to do more homework, before attempting to improve the accuracy (i.e., reduce the error) of a GPS location by 'averaging' multiple samples. Specifically, due to the nature of GPS, taking multiple samples from the same device over a short period of time may not actually improve the accuracy as desired, because of the principal sources of error involved; e.g., see https://gis.stackexchange.com/questions/25798/how-much-sense-does-it-make-to-average-lat-lon-samples-in-order-to-increase-2d

I'm certainly not a GPS expert (and my higher-level calculus is a bit lacking), but my interpretation is that to improve accuracy you really need to average samples over a non-trivial period of time (e.g., tens of minutes) to let the satellites involved substantially change their positions. Again, I would want to do some serious homework on GPS error before assuming that an average of, say, 10 GPS fixes will reduce the "required accuracy" by some percentage. A GPS fix isn't merely like throwing a dart, and its errors don't necessarily follow nice, random, normally distributed noise, so a simple statistical average might not apply (and using one could incorrectly assert an accuracy that isn't actually true).
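A simplified model makes this concern concrete. Assume, purely for illustration, that each of $n$ fixes has error variance $\sigma^2$ and that every pair of fixes has error correlation $\rho$ (high when fixes are taken seconds apart under nearly the same satellite geometry). The variance of their average is then:

$$
\operatorname{Var}(\bar{x}) = \frac{1}{n^2}\Bigl(n\sigma^2 + n(n-1)\rho\,\sigma^2\Bigr) = \frac{\sigma^2}{n}\bigl(1 + (n-1)\rho\bigr) \;\longrightarrow\; \rho\,\sigma^2 \quad (n \to \infty)
$$

Only when $\rho = 0$ (independent errors) does this reduce to the familiar $\sigma^2/n$. With strongly correlated errors, collecting more fixes in quick succession barely shrinks the error of the average, no matter how many are taken.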

There is an old discussion at "Show satellites and time elapsed in geopoint dialog" that gets into the weeds on this topic.

I haven't been a fan of averaging, but @Ivangayton emailed me a few days back and that changed my mind. He said,

Averaging is still used a lot in professional surveying (expensive Trimble and Leica GPS units often use some form of point averaging, and a lot of formal surveying contracts actually specify the number of points that must be collected at a single location to be considered a valid point), and there's good evidence for some slightly more sophisticated algorithms. Fuzzy averaging is one, 50% radius is another, and there are a few more out there.

Bottom line: I don't suggest that averaging should be mandatory, nor should it be presented as a sure answer to precision and accuracy concerns. However, I do think that it's a nice option to offer users, as those with the sophistication to understand the pros and cons of averaging will likely be happy to have the option open to them.

If folks want averaging, so be it, but I think it should be opt-in, the algorithm needs to be specified, and the documentation needs to be clear about what it is and isn't.

I agree (that is, I don't disagree...). I certainly think averaging a bunch of GPS fixes isn't going to give you a worse measurement than you are already getting. My concern is taking a simple average of several data points and then using it to assert a quantitatively improved "accuracy" (i.e., reduced GPS error) for the single resulting averaged measurement, without being mathematically rigorous about it.

So I think setting a required threshold a priori, e.g., +/- 7 m, and taking an average of, say, 22 readings that satisfy it is OK, provided the result is still reported as "+/- 7 m". It's only when averaging multiple data points to quantitatively assert better accuracy that you need to tread carefully... Does that make sense?
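A sketch of that "fixed threshold, report the threshold" rule follows. `averaged_fix` is a hypothetical helper, and `required_count` is an assumed parameter standing in for the number of points a survey might require. The returned accuracy is deliberately the a-priori threshold, not a smaller value derived from the number of points.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Reading:
    lat: float
    lon: float
    accuracy_m: float  # reported accuracy radius in meters


@dataclass(frozen=True)
class AveragedFix:
    lat: float
    lon: float
    accuracy_m: float  # deliberately the a-priori threshold, NOT shrunk by sqrt(n)
    point_count: int


def averaged_fix(
    readings: Sequence[Reading], threshold_m: float, required_count: int
) -> Optional[AveragedFix]:
    """Return an averaged fix once `required_count` readings meet `threshold_m`.

    The reported accuracy stays equal to the threshold: no claim is made that
    the average is more accurate than the individual readings.
    """
    accepted = [r for r in readings if r.accuracy_m <= threshold_m]
    if len(accepted) < required_count:
        return None  # not enough qualifying readings yet; keep collecting
    n = len(accepted)
    return AveragedFix(
        lat=sum(r.lat for r in accepted) / n,
        lon=sum(r.lon for r in accepted) / n,
        accuracy_m=threshold_m,
        point_count=n,
    )
```

This sketch averages every qualifying reading, not just the first `required_count`, and records how many were used so the count can be stored alongside the point.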

Agreed. I don't think it's a goal to assert a lower value for the 68% confidence radius. The level of functionality to aim for should probably be that provided by the averaging feature in other handheld GPS units. Apparently, some surveying contracts specify a requirement for a certain number of points to be collected and averaged.

I absolutely agree with this! It's certainly not defensible to claim greater accuracy or precision because of averaging, especially over a short time period. You might be able to make some claims about precision if you used a more sophisticated algorithm than averaging (throwing out outliers is actually a pretty good start), but I'd still be most comfortable with your suggestion of continuing to report the accuracy of the individual points rather than some kind of extrapolated smaller error. When I mentioned the Trimble/Leica GPS units and professional surveys that specify a number of points to be included in a position, I should have specified they don't make any claims about accuracy, they just want the number of points, and ideally the metadata including Dilution of Precision and maybe even satellites involved for each individual point.
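Since throwing out outliers came up as "a pretty good start", here is one simple way it could be done, shown only as an illustration: find the component-wise median of the points, drop any point whose distance from it exceeds a multiple of the median distance, and average the rest. The function names, the default factor of 3, and the equirectangular distance approximation are all assumptions made for this sketch; this is not the algorithm used by Trimble, Leica, or any other receiver.

```python
import math
import statistics
from typing import List, Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters

Point = Tuple[float, float]  # (lat, lon) in degrees


def distance_m(lat: float, lon: float, lat0: float, lon0: float) -> float:
    """Approximate distance between two nearby points (equirectangular
    approximation; adequate over distances of a few hundred meters)."""
    x = math.radians(lon - lon0) * math.cos(math.radians(lat0))
    y = math.radians(lat - lat0)
    return EARTH_RADIUS_M * math.hypot(x, y)


def average_without_outliers(
    points: Sequence[Point], factor: float = 3.0
) -> Tuple[float, float, List[Point]]:
    """Drop points far from the median center, then average the rest.

    A point counts as an outlier when its distance from the component-wise
    median exceeds `factor` times the median of all those distances.
    Returns (mean_lat, mean_lon, kept_points).
    """
    if not points:
        raise ValueError("no points to average")
    if factor < 1.0:
        # With factor >= 1, at least half the points are always kept.
        raise ValueError("factor must be at least 1")
    lat0 = statistics.median(p[0] for p in points)
    lon0 = statistics.median(p[1] for p in points)
    dists = [distance_m(lat, lon, lat0, lon0) for lat, lon in points]
    cutoff = factor * statistics.median(dists)
    kept = [p for p, d in zip(points, dists) if d <= cutoff]
    n = len(kept)
    return sum(p[0] for p in kept) / n, sum(p[1] for p in kept) / n, kept
```

Using the median rather than the mean as the reference center keeps a single wild fix from dragging the center toward itself before the outlier test is applied.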

In terms of use cases, as you say, if you actually leave a phone sitting in a single place for a couple of hours, the satellite constellations will shift and the errors will be more random. In this case the error will almost certainly be substantially lower, and some users (the kind of people who are already interested in leaving a phone in a single spot for many hours) can use their own judgment to make whatever determinations they can about the accuracy. It won't be useful for everyone, but some of us are going to like it, and it's relatively cheap in terms of development cost.

Though not high-priority. Nice to have, but wouldn't bump many other features to work on it!

It's rather an interesting quandary... The stated goal of this feature is quite noble and legitimate [emphasis added]:

By waiting for several points to be collected, the enumerator can improve the accuracy of the final result.

Which makes perfect sense and appears logically sound. Yet we're not actually going to quantitatively report a better accuracy (i.e., a smaller error) in the actual result! So users may well wonder, "Huh!? Why am I still only getting +/- 7 m?"

I'm not sure how best to explain why they should bother waiting...
