I'm going to try it, Mitch. For me this is dancing on thin ice... let's see!

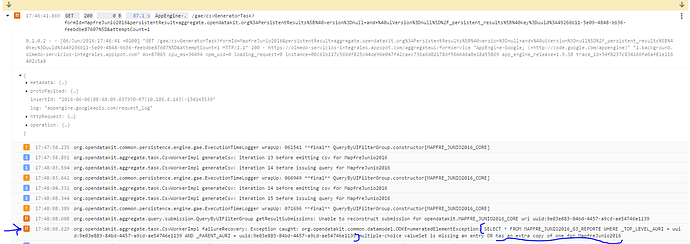

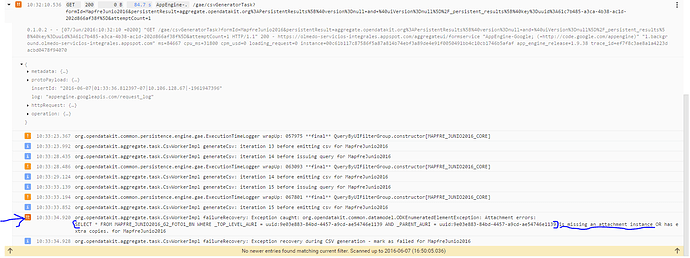

I.e.,

org.opendatakit.aggregate.task.gae.servlet.UploadSubmissionsTaskServlet
doGet: org.opendatakit.aggregate.exception.ODKExternalServiceException: org.opendatakit.common.datamodel.ODKEnumeratedElementException: SELECT * FROM MAPFRE_JUNIO2016_G3_REPORTE WHERE _TOP_LEVEL_AURI = uuid:9e03e883-84bd-4457-a9cd-ae54746e1139 AND _PARENT_AURI = uuid:9e03e883-84bd-4457-a9cd-ae54746e1139 multiple-choice valueSet is missing an entry OR has an extra copy of one

And then, on the Datastore tab, if you enter this in the query box:

SELECT * FROM opendatakit.MAPFRE_JUNIO2016_G3_REPORTE WHERE _TOP_LEVEL_AURI = "uuid:9e03e883-84bd-4457-a9cd-ae54746e1139" AND _PARENT_AURI = "uuid:9e03e883-84bd-4457-a9cd-ae54746e1139"

you'll find two entries with the same _ORDINAL_NUMBER value (1).

Per the instructions (steps 6 and 7), delete the older of these two.
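The check behind that error message can be sketched in Python to make "missing an entry OR has an extra copy of one" concrete. This is a minimal illustration, not ODK Aggregate's code. It assumes you have copied the rows returned by the query above into dicts, that each row carries `_URI`, `_ORDINAL_NUMBER` and `_CREATION_DATE` fields (the creation-date field name is an assumption), and that ordinals are expected to run 1..N without gaps (also an assumption). It reports every copy of a duplicated ordinal except the newest, matching "delete the older one".

```python
from collections import Counter, defaultdict
from datetime import datetime


def older_duplicates(rows):
    """Return the _URI of every copy of a duplicated _ORDINAL_NUMBER except
    the newest one (by _CREATION_DATE, an assumed field name)."""
    by_ordinal = defaultdict(list)
    for row in rows:
        by_ordinal[row["_ORDINAL_NUMBER"]].append(row)

    stale = []
    for ordinal in sorted(by_ordinal):
        copies = sorted(by_ordinal[ordinal], key=lambda r: r["_CREATION_DATE"])
        # Keep the newest copy; every older copy is a deletion candidate.
        stale.extend(r["_URI"] for r in copies[:-1])
    return stale


def missing_ordinals(rows):
    """Assuming ordinals should run 1..N with no gaps, list the missing ones."""
    counts = Counter(row["_ORDINAL_NUMBER"] for row in rows)
    if not counts:
        return []
    return [n for n in range(1, max(counts) + 1) if n not in counts]


# Hypothetical rows mirroring the case above: two copies of ordinal 1
# under the same _PARENT_AURI.
rows = [
    {"_URI": "uuid:older-copy", "_ORDINAL_NUMBER": 1,
     "_CREATION_DATE": datetime(2016, 6, 6, 9, 0)},
    {"_URI": "uuid:newer-copy", "_ORDINAL_NUMBER": 1,
     "_CREATION_DATE": datetime(2016, 6, 6, 9, 5)},
]

print(older_duplicates(rows))   # ['uuid:older-copy']
print(missing_ordinals(rows))   # []
```

Sorting by creation date rather than by `_URI` is deliberate: URIs are random UUIDs and say nothing about which copy came first.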

On Mon, Jun 6, 2016 at 9:39 AM, Pablo Rodríguez <nuncaestardes...@gmail.com> wrote:

Well, now I'm having the very same issue in another project. Worst timing ever, in the middle of a big running project :S

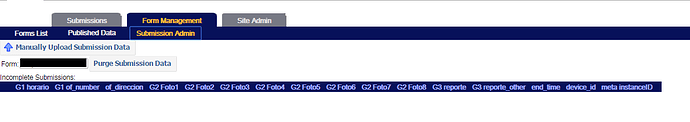

According to ODK, there are no incomplete submissions.

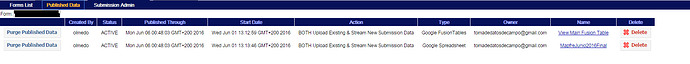

I was publishing to a Google Spreadsheet and a Fusion Table (running on Google App Engine with billing enabled).

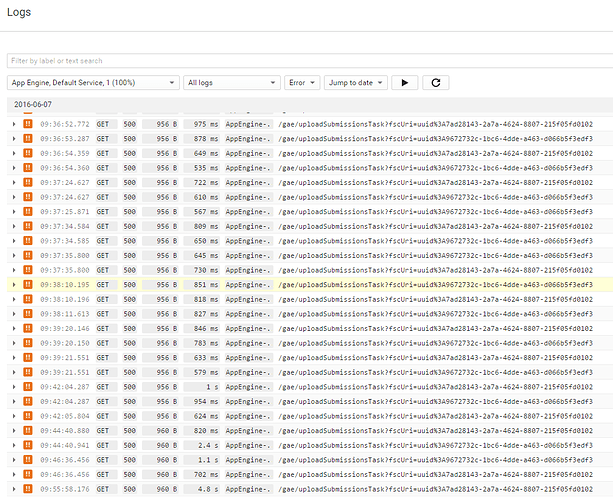

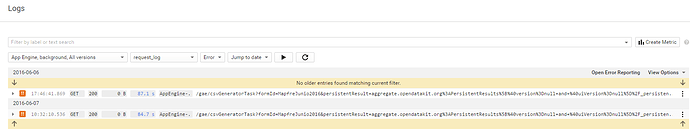

Looking at the log, I'm getting an average of 4 to 7 errors per minute, basically like this:

18:14:55.688 GET 500 956 B 550 ms AppEngine-Google; (+http://code.google.com/appengine) /gae/uploadSubmissionsTask?fscUri=uuid%3A9672732c-1bc6-4dde-a463-d066b5f3edf3

0.1.0.2 - - [06/Jun/2016:18:14:55 +0200] "GET /gae/uploadSubmissionsTask?fscUri=uuid%3A9672732c-1bc6-4dde-a463-d066b5f3edf3 HTTP/1.1" 500 956 http://olmedo-servicios-integrales.appspot.com/gae/watchdog "AppEngine-Google; (+http://code.google.com/appengine)" "olmedo-servicios-integrales.appspot.com" ms=550 cpu_ms=231 cpm_usd=2.5548e-7 loading_request=0 instance=00c61b117c327d751511c82e8ba427e6081789412cae8a6b2a33f690566e8b31 app_engine_release=1.9.38 trace_id=-

{
  metadata: {…}
  protoPayload: {…}
  insertId: "2016-06-06|09:15:00.884913-07|10.106.162.86|1409825575"
  log: "appengine.googleapis.com/request_log"
  httpRequest: {…}
  operation: {…}
}
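To confirm the "4 to 7 errors per minute" figure and see whether every failure comes from the same publisher, the raw request-log lines can be tallied. A rough Python sketch, assuming the lines have been copied out of the log viewer as plain text in the format shown above. The regular expression, the function name `upload_errors`, and grouping by the `fscUri` query parameter (which appears to identify the publishing job) are my own choices, not an App Engine or ODK tool.

```python
import re
from collections import Counter
from datetime import datetime
from urllib.parse import parse_qs, urlparse

# Matches the timestamp, request line and status of a combined-format log line.
LOG_RE = re.compile(
    r'\[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)


def upload_errors(lines, path_prefix="/gae/uploadSubmissionsTask"):
    """Count 5xx responses for the upload task per minute and per fscUri."""
    per_minute = Counter()
    per_fsc_uri = Counter()
    for line in lines:
        m = LOG_RE.search(line)
        if not m or not m.group("path").startswith(path_prefix):
            continue
        if int(m.group("status")) < 500:
            continue
        ts = datetime.strptime(m.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        per_minute[ts.strftime("%Y-%m-%d %H:%M")] += 1
        # parse_qs also decodes %3A back to ':'
        query = parse_qs(urlparse(m.group("path")).query)
        for uri in query.get("fscUri", []):
            per_fsc_uri[uri] += 1
    return per_minute, per_fsc_uri


sample = (
    '0.1.0.2 - - [06/Jun/2016:18:14:55 +0200] "GET /gae/uploadSubmissionsTask'
    '?fscUri=uuid%3A9672732c-1bc6-4dde-a463-d066b5f3edf3 HTTP/1.1" 500 956 '
    'http://olmedo-servicios-integrales.appspot.com/gae/watchdog'
)
minutes, uris = upload_errors([sample])
print(dict(minutes))
print(dict(uris))
```

If a single `fscUri` accounts for nearly all the 500s, the problem is most likely one publisher repeatedly retrying on the same corrupted submission rather than a general server failure.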

Searching the log for OutOfMemory, I don't find anything.

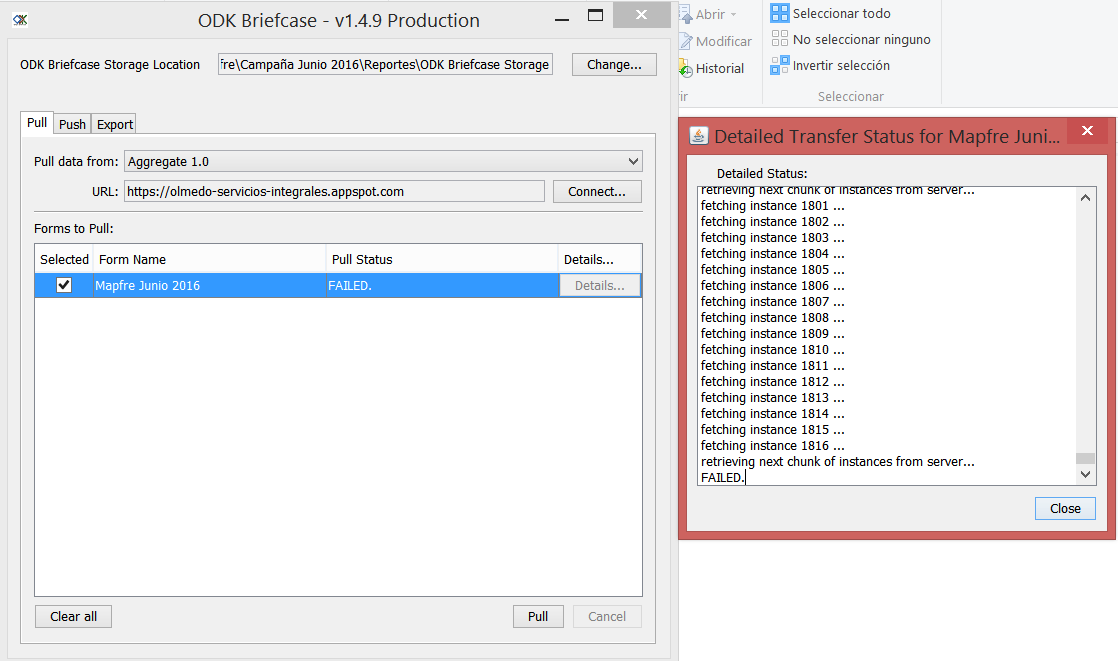

I just pulled all the data with Briefcase, and as far as I can see I have more records than are in my published tables.

My biggest concern is that I could lose submissions :S.

Any help is much appreciated.

Regards,

On Fri, May 6, 2016 at 6:22 PM, Mitch Sundt <mitche...@gmail.com> wrote:

This would indicate a data corruption issue.

See these instructions:

https://github.com/opendatakit/opendatakit/wiki/Aggregate-AppEngine-Troubleshooting#reparing-a-filled-in-form-submission

Regarding ODK Briefcase starting to pull data from the beginning every time: if, after you pull data, you push it back up to the same server, this sets tracking flags so that those records are skipped the next time you pull.

ODK Briefcase stops at the first corrupted record because it fetches data in ascending marked-as-complete timestamp order. Until the corrupted record is repaired or removed, it will not proceed to other records.
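A toy model of the behaviour described above, in Python, showing why one bad record blocks everything after it and why pushing back avoids re-downloading. It is not ODK Briefcase's actual code: submissions are plain in-memory objects, and `already_pulled` is a hypothetical stand-in for the server-side tracking flags that a push sets.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Submission:
    instance_id: str
    marked_as_complete: datetime
    corrupted: bool = False
    # Hypothetical stand-in for the tracking flag a push back to the server sets.
    already_pulled: bool = False


def pull(submissions: List[Submission]) -> List[str]:
    """Toy model of a pull: ascending marked-as-complete order, skipping
    flagged records and stopping at the first corrupted one."""
    fetched = []
    for sub in sorted(submissions, key=lambda s: s.marked_as_complete):
        if sub.already_pulled:
            continue
        if sub.corrupted:
            break  # nothing later is reached until this record is repaired or removed
        fetched.append(sub.instance_id)
    return fetched


subs = [
    Submission("a", datetime(2016, 5, 1)),
    Submission("b", datetime(2016, 5, 2), corrupted=True),
    Submission("c", datetime(2016, 5, 3)),
]
print(pull(subs))  # ['a']: 'c' is never reached

subs[0].already_pulled = True   # e.g. after pushing 'a' back to the server
subs[1].corrupted = False       # e.g. after repairing 'b'
print(pull(subs))  # ['b', 'c']
```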

Data corruption is more likely to occur if your server memory is becoming exhausted or if you are submitting data over very-low-bandwidth communications channels (e.g., satellite).

On Fri, May 6, 2016 at 5:52 AM, nuncaestardes...@gmail.com wrote:

On Friday, May 6, 2016, 12:30:39 (UTC+2), nuncaestardes...@gmail.com wrote:

On Tuesday, May 3, 2016, 14:10:28 (UTC+2), nuncaestardes...@gmail.com wrote:

Hi guys,

I'm kind of new here, but I already have this problem:

In ODK Aggregate, when I try to export my data to a .csv file, it shows an error (Failure - will retry later). It was working fine, but I guess the database reached a certain size that made it fail.

I have read that it is related to the global max_allowed_packet setting, which has to be set to a higher value with the following statement:

set global max_allowed_packet = 1073741824;

I have ODK Aggregate deployed on Google App Engine, so I tried typing that into the Cloud Shell, but nothing happened. I still have the same problem, and as you can imagine, it's the worst moment for this to happen...

I have an Excel macro that reads the CSV file, downloads the media files, renames them and saves everything in a file, so that I can then open each picture from a link in an Excel spreadsheet.

Now I can only get the CSV using ODK Briefcase by:

1- Pulling all the data.
2- Exporting the CSV (I can only do this after first pulling the data).

The problem is that this way I have to download the media files twice (in Briefcase and in my macro), and the CSV file exported by Briefcase contains the local path to the media files on my computer rather than the cloud link to them, which in my case is far more convenient. I figured out that the link to the media is a concatenation of the ID number and some other things, so I managed to work around this problem, but I had to change my whole procedure in the middle of the work, making it much more manual and preventing me from using the tools I developed to automate all this work.

Does anyone know how I can execute this statement on ODK Aggregate deployed on Google App Engine?

set global max_allowed_packet = 1073741824;

If that really is the problem, which I hope it is.

Thank you very much in advance.

Regards,

Pablo

One more thing: every time I pull data with Briefcase, it downloads the whole project instead of just the new entries. That could be something easy to improve in future versions; my Excel macro that downloads everything from the CSV already does that, and it is much more convenient this way.

Regards,

Pablo

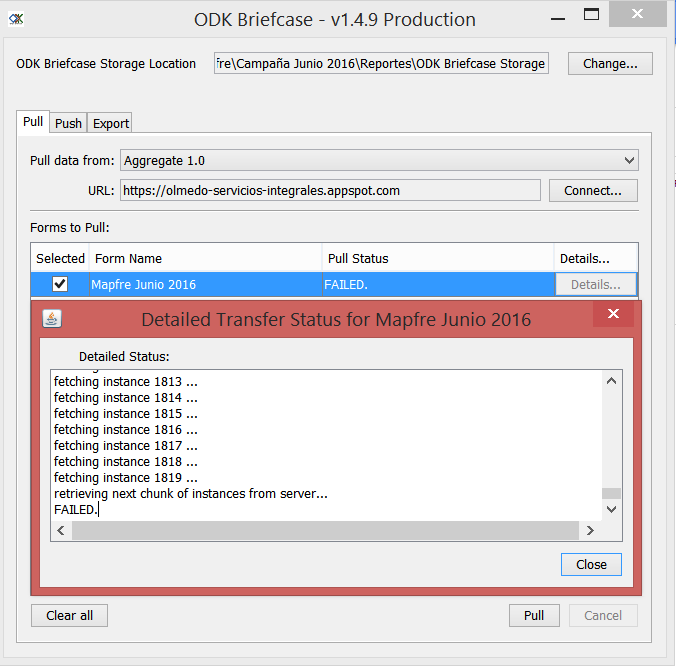

I am trying to pull all the data with Briefcase, and the log shows this:

...
fetching instance 3048 ...
fetching instance 3049 ...
fetching instance 3050 ...
fetching instance 3051 ...
fetching instance 3052 ...
fetching instance 3053 ...
retrieving next chunk of instances from server...
FAILED.

I don't know whether it failed because there is nothing more to pull or because something else is actually happening... Right now I don't know how many entries I have. 3053? I don't know... The filter in Aggregate does not work, so I don't know how I could check this...
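The Briefcase log can at least show how far a given run got. A small Python sketch, assuming the log has been saved as text in the format quoted above; `summarize_pull_log` is a hypothetical helper, not part of Briefcase. It reports the last instance number fetched and whether the run ended in FAILED. That number appears to be a running count for this pull only, not the total stored on the server; per the explanation about ascending timestamp order, a FAILED right after "retrieving next chunk" is consistent with a corrupted record blocking the pull, though it does not prove it.

```python
import re

FETCH_RE = re.compile(r"fetching instance (\d+)")


def summarize_pull_log(text):
    """Return (last instance number fetched, whether the log ends in FAILED)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    fetched = []
    for ln in lines:
        m = FETCH_RE.search(ln)
        if m:
            fetched.append(int(m.group(1)))
    last = fetched[-1] if fetched else None
    ended_in_failure = bool(lines) and lines[-1].startswith("FAILED")
    return last, ended_in_failure


log = """...
fetching instance 3052 ...
fetching instance 3053 ...
retrieving next chunk of instances from server...
FAILED."""

print(summarize_pull_log(log))  # (3053, True)
```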

--

--

Post: opend...@googlegroups.com

Unsubscribe: opendatakit...@googlegroups.com

Options: http://groups.google.com/group/opendatakit?hl=en

You received this message because you are subscribed to the Google

Groups "ODK Community" group.

To unsubscribe from this group and stop receiving emails from it, send

an email to opendatakit...@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

--

Mitch Sundt

Software Engineer

University of Washington

mitche...@gmail.com
