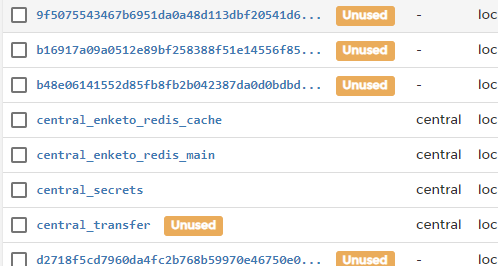

- Installed 1.0 over a running 0.9.

- `docker ps` shows all containers as healthy.

- Tried to add a user (why are data and settings not made persistent in Docker?).

The error is shown below. I reverted to 0.9.

root@ubuntu-server:~/central# docker-compose exec service odk-cmd --email my.name@myname.de user-create

prompt: password: ********

error: insert into "actees" ("id", "species") values ($1, $2) returning * - relation "actees" does not exist

at Parser.parseErrorMessage (/usr/odk/node_modules/pg-protocol/dist/parser.js:241:15)

at Parser.handlePacket (/usr/odk/node_modules/pg-protocol/dist/parser.js:89:29)

at Parser.parse (/usr/odk/node_modules/pg-protocol/dist/parser.js:41:38)

at Socket.<anonymous> (/usr/odk/node_modules/pg-protocol/dist/index.js:8:42)

at Socket.emit (events.js:203:13)

at addChunk (_stream_readable.js:294:12)

at readableAddChunk (_stream_readable.js:275:11)

at Socket.Readable.push (_stream_readable.js:210:10)

at TCP.onStreamRead (internal/stream_base_commons.js:166:17) {