Thanks for writing this up @martijnvaandering and for the interest in possibly implementing webhook support. The first step will be to do some requirements gathering to really understand the workflows that you and others want to support, and to work out where webhooks would fit relative to the data sharing options that currently exist.

Here are solutions that people currently use for data sharing from Central to other systems:

- OData connection from PowerBI/Excel/Tableau. This can be configured entirely through those tools' UIs including basic auth. Refresh is done via polling either on demand or periodically. The full data document is downloaded each time. See docs

- JSON request (OData document). A script or service can request this periodically, similarly to PowerBI/Excel/Tableau above. It can either ignore types or get schema information from the OData metadata document like OData consumers, from the simplified schema endpoint, from the XForm, or from the XLSForm.

- JSON request using a $filter on updatedAt to only request new submissions. Similar to above but requires maintaining state. E.g. pyODK: Using cursors to efficiently pull new data only

- CSV request. Similar to JSON above, can also be filtered, but usually a less convenient format than JSON.

- Raw XML submission request. Usually this will not be as convenient as the methods listed above for building integrations.

The common thread with all of these above is that they require some system to poll Central.
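To make the filtered polling option more concrete, here is a rough sketch of the state a poller has to keep. It is not pyODK's implementation: the helper names `build_submissions_url` and `next_cursor` are made up for illustration, and the `__system/updatedAt` field path and URL layout are my assumptions about the OData feed, so please check them against the Central API docs before relying on them. It also assumes all timestamps are UTC ISO 8601 strings in the same format, so they can be compared as plain strings.

```python
from urllib.parse import quote


def build_submissions_url(base_url, project_id, form_id, cursor=None):
    """Build an OData Submissions URL, optionally filtered by a cursor.

    The path layout and the __system/updatedAt field are assumptions.
    """
    url = (
        f"{base_url}/v1/projects/{project_id}"
        f"/forms/{quote(form_id, safe='')}.svc/Submissions"
    )
    if cursor:
        # Only ask for rows changed after the last timestamp we saw.
        filter_expr = f"__system/updatedAt gt {cursor}"
        url += "?$filter=" + quote(filter_expr, safe="")
    return url


def next_cursor(rows, previous=None):
    """Return the newest updatedAt seen so far, to store for the next poll."""
    stamps = [
        row["__system"]["updatedAt"]
        for row in rows
        if row.get("__system", {}).get("updatedAt")
    ]
    if previous:
        stamps.append(previous)
    # Same-format UTC ISO 8601 strings sort chronologically.
    return max(stamps) if stamps else None
```

The value returned by `next_cursor` is the state that has to be persisted between runs (a file, a database row, etc.). One thing to watch for: a submission that has never been edited may not have an updatedAt value at all, so a real poller would likely need to take the submission date into account as well.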

Push vs. pull

The idea with webhooks is that Central could push notifications to external systems instead. There are always tradeoffs between pushing and polling. Having Central push updates is attractive to external systems because it may save them bandwidth and complexity. However, that comes at the cost of additional complexity for Central. Implementing webhooks well requires having strategies for retrying failed calls, stopping gracefully in the case of misbehaving target services, logging attempts to contact target services, etc.

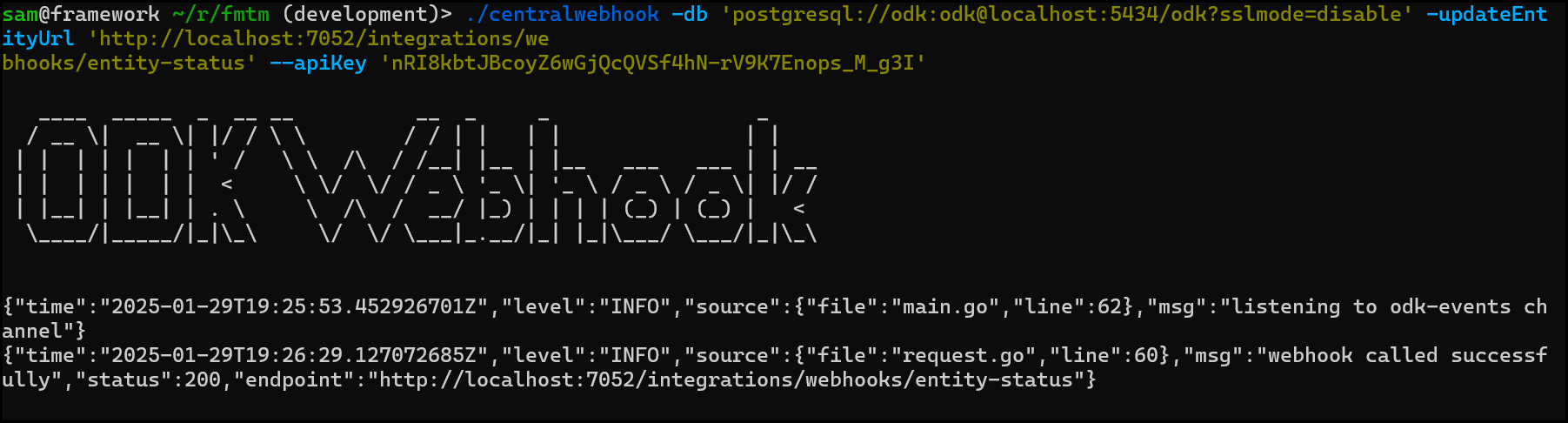

One way to mitigate the downsides of polling is to introduce a middleware service that polls Central from the same machine and then makes webhook calls to target remote services. That middleware component can handle business logic around e.g. what to do in case of failure. This can be a small custom service (with the downside that this requires software development) or something like OpenHIM (which may introduce more complexity than desired). There are also no-code or low-code solutions for this like Zapier, If This Then That, Activepieces (open source), and OpenFn.
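For a sense of what a small custom middleware service involves, here is a minimal sketch of the "poll, then push with retries" loop. Everything in it is hypothetical: `fetch_new` stands in for whatever polls Central and `send` for whatever calls the target webhook, and both are passed in as plain functions so the failure handling can be seen on its own. The retry count and exponential backoff are just one possible policy, not a recommendation.

```python
import time


def deliver_with_retry(send, payload, max_attempts=4, base_delay=1.0,
                       sleep=time.sleep):
    """Call send(payload), retrying with exponential backoff on any error.

    Returns True on success, False once max_attempts is exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            send(payload)
            return True
        except Exception as exc:
            # Log every failed attempt so problems with the target are visible.
            print(f"attempt {attempt} failed: {exc}")
            if attempt < max_attempts:
                # Wait 1x, 2x, 4x, ... base_delay between attempts.
                sleep(base_delay * 2 ** (attempt - 1))
    return False


def relay_once(fetch_new, send, **retry_options):
    """Fetch new submissions once and forward each one to the target.

    Returns the submissions that could not be delivered so the caller
    can decide what to do with them (queue, alert, retry later, ...).
    """
    failed = []
    for submission in fetch_new():
        if not deliver_with_retry(send, submission, **retry_options):
            failed.append(submission)
    return failed
```

A scheduler (cron, a systemd timer, a simple loop) would call `relay_once` at whatever interval matches how close to real-time the integration needs to be. What happens to the returned failures is exactly the kind of business logic that depends on your fault tolerance.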

The tradeoffs between pushing and polling also depend on the specific needs. For example, if you have a dashboard that needs to be updated once a day, polling daily may be a strictly better choice. If your dataset is relatively small (something like <10,000 submissions x 200 questions), many efficiency considerations won't really matter.

How you can help

All of this said, for anyone interested in webhooks including @martijnvaandering and @ibrahim_i3 (from Adding webhooks to a form), it would be helpful to know the following:

- What system would you like to integrate with?

- What action on that system would you like to perform?

- What Central activity would you like to use as a trigger? E.g. a new submission comes in? A new submission or edit comes in? A submission's approval status changes? Etc.

- What information from Central would you like to get when the action is triggered? (e.g. just the instanceID of the new submission, the submission's data, the submission's data and approval status, the submission's data and metadata, etc.)

- How close to real-time does that action actually need to be? (e.g. someone's life might be in danger if a Slack message is delayed by more than 5 minutes vs. a weekly batch email would be sufficient)

- What is your fault tolerance? (e.g. I need most submissions to get to the target system but dropping a few here and there is not a big deal vs. someone's life might be in danger if submissions are dropped between systems)

- What have you tried so far?

@martijnvaandering, you specifically mention PowerBI. I think the OData feed mentioned above is your best bet in that case. I don't believe PowerBI exposes a webhook endpoint.

For Teams, it would be helpful to know the answer to the questions above and specifically what Central activity you would like to use as a trigger and what ODK data you want to use within Teams.

For Slack, you could use a custom workflow to poll Central up to once a day. If you need near-real-time Slack messaging, you could use a middleware layer like the one I described above to poll Central and then make Slack webhook requests. I can think of at least one person doing this now and can ask them for more specifics if that would be helpful.

You also mentioned Enketo specifically and I wanted to address that. Enketo Express is a service built to work with servers that implement the OpenRosa API. If you don't want to use the OpenRosa API, you could fork Express to send to an arbitrary endpoint or build your own lightweight wrapper around Enketo Core. At this time, we are not interested in a contribution to Enketo Express to add submission to arbitrary endpoints.